Securing AI Supply Chains: Preventing Data Poisoning and Adversarial Attacks

- Shiksha ROY

- Oct 22

SHIKSHA ROY | DATE: JANUARY 31, 2025

In the rapidly evolving landscape of Artificial Intelligence (AI), the integrity and security of AI systems have become paramount. As AI is integrated into critical sectors such as healthcare, finance, and national security, the risks posed by compromised AI supply chains grow with it. Among these risks, data poisoning and adversarial attacks stand out as significant threats to the reliability and trustworthiness of AI models. Data poisoning is the deliberate manipulation of training data to corrupt a model, while adversarial attacks exploit weaknesses in a trained model to push it into erroneous decisions. This article examines how to secure AI supply chains and outlines the security measures needed to prevent these attacks and deploy AI technologies safely.

Understanding AI Supply Chain Security

What is an AI Supply Chain?

An AI supply chain covers the entire lifecycle of an AI model, from data collection to deployment. AI supply chain security refers to safeguarding that lifecycle by protecting data sources, model training processes, and distribution channels from malicious intervention. Doing so requires a multi-layered approach that combines established cybersecurity practices with AI-specific defenses.

Importance of Securing AI Supply Chains

Securing AI supply chains is crucial because a compromise at any stage can lead to incorrect decision-making, financial losses, and reputational damage. Preserving the integrity and reliability of AI systems is essential for maintaining trust in their outputs.

Threats to AI Supply Chains

Data Poisoning Attacks

Data poisoning occurs when adversaries manipulate training data to introduce biases, degrade performance, or embed backdoors into AI models. These attacks can occur at various stages, such as during data collection, during preprocessing, or at third-party data repositories. The consequences can be severe, leading to inaccurate predictions, security vulnerabilities, and ethical concerns. Common types of data poisoning attacks include:

Label Flipping:

This involves changing the labels of training data, causing the model to misclassify inputs.

Backdoor Attacks:

Attackers introduce hidden triggers in the training data that activate malicious behavior in the AI model during deployment.

Flooding Attacks:

Overwhelming the AI system with benign data to normalize certain patterns, allowing malicious data to slip through undetected.
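As a rough illustration of the first attack type, the sketch below simulates label flipping on a toy list of labels. The function name `flip_labels`, the 10% poisoning rate, and the binary label set are all assumptions chosen for the example, not details from any particular incident; a simulation like this is mainly useful for testing how much corruption a training pipeline tolerates.

```python
import random


def flip_labels(labels, fraction, num_classes, seed=0):
    """Return a copy of `labels` with `fraction` of entries set to a wrong class."""
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    poisoned = list(labels)
    n_flip = round(len(labels) * fraction)
    # Pick distinct positions to corrupt.
    for i in rng.sample(range(len(labels)), n_flip):
        # Choose any class other than the true one.
        wrong = [c for c in range(num_classes) if c != labels[i]]
        poisoned[i] = rng.choice(wrong)
    return poisoned


clean = [0, 1] * 50  # 100 toy binary labels
poisoned = flip_labels(clean, fraction=0.1, num_classes=2)
changed = sum(a != b for a, b in zip(clean, poisoned))
print(f"{changed} of {len(clean)} labels flipped")
```

Training the same model on `clean` and on `poisoned` and comparing held-out accuracy gives a simple measure of how sensitive the model is to this kind of corruption.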

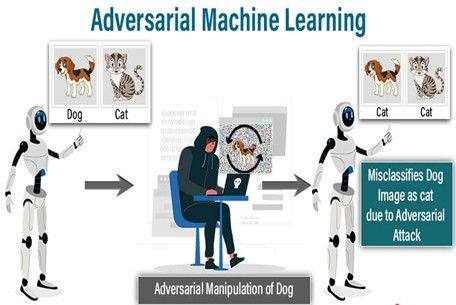

Adversarial Attacks

Adversarial attacks involve crafting deceptive inputs designed to mislead AI models. These attacks exploit the model’s weaknesses, causing misclassifications or incorrect outputs. Common methods include:

Evasion Attacks:

Altering input data to fool AI systems (e.g., modifying images slightly to deceive facial recognition systems).

Model Extraction Attacks:

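Reverse-engineering AI models, typically by querying a deployed model and using its responses to reconstruct a working copy that can then be probed for vulnerabilities.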

Trojan Attacks:

Embedding hidden triggers in AI models that activate under specific conditions.
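To make the evasion case concrete, here is a minimal sketch of a one-step sign-gradient perturbation (the idea behind the Fast Gradient Sign Method) against a tiny logistic-regression classifier. The weights, the input, and the step size `eps` are made-up values for illustration; real attacks target far larger models, but the mechanism is the same: nudge each input feature slightly in the direction that increases the model's loss.

```python
import math


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of `value`."""
    return (value > 0) - (value < 0)


def sign_gradient_attack(w, b, x, y, eps):
    """Shift every feature by at most `eps` to increase the loss for true label y."""
    p = predict_proba(w, b, x)
    # For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


w, b = [2.0, -1.0, 0.5], 0.0   # hypothetical trained weights
x, y = [0.3, 0.2, 0.4], 1      # an input the model classifies correctly as class 1
x_adv = sign_gradient_attack(w, b, x, y, eps=0.2)

p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)
print(f"clean: {p_clean:.3f}  adversarial: {p_adv:.3f}")
```

With these assumed values, the clean input scores above 0.5 and the perturbed input scores below it, even though no feature moved by more than 0.2.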

Strategies to Secure AI Supply Chains

Ensuring Data Integrity and Authenticity

To mitigate data poisoning, organizations must implement strict data validation and provenance tracking mechanisms. Best practices include using cryptographic hashing to verify data integrity, employing anomaly detection algorithms to identify poisoned data, and enforcing access controls to prevent unauthorized data modifications.
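The hashing step can be sketched with Python's standard `hashlib` module. The example below records a SHA-256 digest for each dataset file in a manifest and later reports any file whose contents no longer match. The helper names (`build_manifest`, `find_tampered`) and the sample file `train.csv` are hypothetical, and the sketch assumes the manifest itself is stored somewhere an attacker cannot modify.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths):
    """Map each file path to the digest recorded at ingestion time."""
    return {str(p): sha256_of(p) for p in paths}


def find_tampered(manifest):
    """Return the paths whose current digest differs from the recorded one."""
    return [p for p, expected in manifest.items() if sha256_of(p) != expected]


with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "train.csv"
    data_file.write_text("text,label\ngood product,1\n")
    manifest = build_manifest([data_file])
    untouched = find_tampered(manifest)   # nothing has changed yet

    # Simulate an attacker flipping a label inside the stored file.
    data_file.write_text("text,label\ngood product,0\n")
    tampered = find_tampered(manifest)

print("before:", untouched)
print("after:", tampered)
```

A hash check only detects changes made after the manifest was built; data that was already poisoned at collection time still needs validation or anomaly detection.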

Robust Model Training Practices

Securing the AI model training process is crucial in preventing adversarial manipulation. Key measures include:

Differential Privacy:

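Limiting how much any individual data point can influence model outcomes, typically by adding calibrated noise during training, which can reduce susceptibility to attacks that depend on a small number of manipulated records.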

Adversarial Training:

Exposing models to adversarial examples during training to improve resilience.

Federated Learning:

Distributing training across multiple secure nodes so that raw data stays local, reducing the risk of a single central dataset becoming the point of compromise.
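One way to picture the idea of bounding each data point's influence is the gradient step used in DP-SGD-style training: clip every per-example gradient to a maximum norm, sum the results, add Gaussian noise, and average. The sketch below shows only that aggregation step with made-up gradients; `max_norm` and `noise_multiplier` are illustrative parameters, and the code does no privacy accounting, so it should not be read as providing a formal differential privacy guarantee on its own.

```python
import math
import random


def clip(grad, max_norm):
    """Scale `grad` down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scaled to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


# Two ordinary gradients and one extreme one, as a poisoned sample might produce.
grads = [[0.1, -0.2], [0.3, 0.1], [30.0, 40.0]]
rng = random.Random(0)

no_noise = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=0.0, rng=rng)
with_noise = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng)
print("clipped mean:", no_noise)
print("clipped + noisy mean:", with_noise)
```

Without clipping, the third gradient would dominate the average; after clipping it contributes a vector of norm 1 at most, which is the sense in which no single example can significantly steer the update.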

Strengthening AI Model Deployment and Monitoring

Once trained, AI models must be deployed securely and continuously monitored to detect anomalies. Essential steps include:

Runtime Monitoring:

Deploying AI security tools to detect real-time adversarial attempts.

Model Watermarking:

Embedding unique identifiers in AI models to track and validate authenticity.

Access Control Measures:

Restricting unauthorized access to AI models and APIs.
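As a small sketch of runtime monitoring, the class below tracks the share of low-confidence predictions in a sliding window and raises a flag when that share exceeds a threshold. The class name `ConfidenceMonitor`, the window size, and both thresholds are assumptions for illustration. Low confidence is only a heuristic signal: some adversarial inputs produce high-confidence outputs, so a monitor like this would complement other detectors rather than replace them.

```python
from collections import deque


class ConfidenceMonitor:
    """Flag windows in which too many predictions fall below a confidence floor."""

    def __init__(self, window=50, low_conf=0.6, max_low_rate=0.3):
        self.low_conf = low_conf
        self.max_low_rate = max_low_rate
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically

    def observe(self, confidence):
        """Record one prediction; return True if the current window looks anomalous."""
        self.recent.append(confidence < self.low_conf)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough history to judge yet
        return sum(self.recent) / len(self.recent) > self.max_low_rate


monitor = ConfidenceMonitor(window=10, low_conf=0.6, max_low_rate=0.3)
normal = [0.95, 0.90, 0.88, 0.97, 0.55, 0.92, 0.91, 0.99, 0.93, 0.90]
suspicious = [0.52, 0.51, 0.58, 0.54]  # a burst of uncertain predictions

alerts = [monitor.observe(c) for c in normal + suspicious]
print("first alert at request index:", alerts.index(True))
```

In this toy stream the monitor stays quiet through normal traffic and raises its first alert once more than 30% of the last ten predictions are low-confidence.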

Collaboration and Standardization

Securing AI supply chains requires collaboration among stakeholders, including developers, security researchers, and policymakers. Organizations should follow established cybersecurity frameworks (e.g., the NIST Cybersecurity Framework, ISO/IEC 27001), engage in information sharing with industry peers to address emerging threats, and advocate for regulatory compliance to establish standardized security practices.

Conclusion

Securing AI supply chains is a critical endeavor in the modern technological landscape. As AI systems become increasingly integral to various sectors, the risks posed by data poisoning and adversarial attacks cannot be overlooked. These threats can compromise the integrity, reliability, and trustworthiness of AI models, leading to incorrect decisions, financial losses, and reputational damage. By implementing robust security measures, such as data validation, anomaly detection, provenance tracking, and comprehensive access controls, organizations can mitigate these risks. Robust training practices such as adversarial training, differential privacy, and federated learning, combined with secure deployment and continuous monitoring, further strengthen the resilience of AI systems. Ultimately, a proactive and comprehensive approach to securing AI supply chains is essential for the safe and reliable deployment of AI technologies and for fostering trust in their use across critical applications.

